BC.Game App Download

Welcome to our comprehensive guide to the BC.Game Casino App! In this article, we will provide you with all the information you need to know about the app, including how to download it, what features it offers and which games are available. We will also take a look at the security and licensing of BC.Game, as well as its mobile bonuses and banking options. So whether you’re a new player or just curious about BC.Game, read on for everything you need to know!

BC.Game application: everything you need to know

BC.Game is a casino app that can be downloaded for both iOS and Android devices. The app offers a wide range of features, including a live dealer casino, slots, table games, video poker and more. There are also many mobile-exclusive bonuses and promotions available, as well as a loyalty program.

The casino is safe and secure, so you can be confident that your personal and financial information is protected.

In terms of mobile bonuses, BC.Game offers a 100% match bonus on your first deposit up to $100. There are also many other promotions available, including reload bonuses, cashback offers and more.

So now that we’ve covered all the basics, let’s take a closer look at what BC.Game has to offer!

Mobile games and casino software

The mobile games in BC.Game are powered by a number of different software providers, including NetEnt, Microgaming, Play’n GO and more. This means that you can expect to find a wide range of popular slots and table games, as well as some lesser-known titles.

In terms of slots, there are many classic and video slots available, such as Starburst, Gonzo’s Quest and Game of Thrones. There are also progressive jackpot slots on offer, with prizes that can reach into the millions.

If you’re a fan of table games, you’ll be pleased to know that BC.Game offers a wide selection, including blackjack, roulette, baccarat and poker. There are also many live dealer games available, which are ideal if you’re looking for a more realistic casino experience.

Finally, there is a good selection of video poker games on offer, as well as some other specialty games.

So whatever your preference, you’re sure to find a game that suits you at BC.Game!

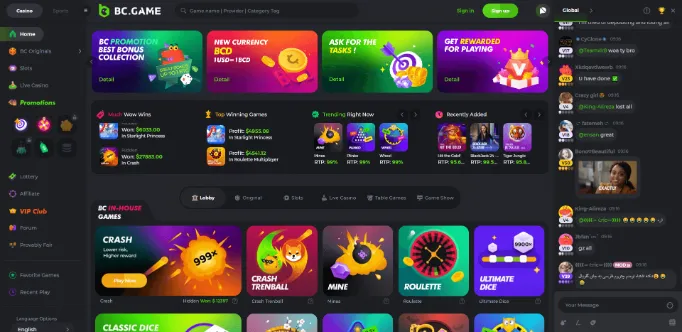

Usability and Design

Now let’s take a look at the usability and design of BC.Game. The app has a modern and user-friendly interface that is easy to navigate. The lobby is well organised and you can filter the games by type, provider or popularity.

The games themselves are also well designed and run smoothly on both iOS and Android devices. You can also choose to play for free or real money, which is perfect if you want to try out a new game before risking your own cash.

So in terms of usability and design, BC.Game gets top marks!

BC.Game Casino App Security and Licensing

BC.Game is licensed by the Malta Gaming Authority and the UK Gambling Commission, which are two of the most reputable licensing bodies in the industry. This means you can rest assured that BC.Game is a secure and trusted gaming environment.

BC.Game uses up-to-date SSL encryption technology to safeguard your personal and financial information in transit, allowing you to play with confidence.

BC.Game Casino App Mobile Bonuses

As we mentioned earlier, BC.Game offers a 100% match bonus on your first deposit up to $100. There are also many other promotions available, including reload bonuses, cashback offers and more.

For example, the ‘Game of the Week’ promotion gives you the chance to earn double loyalty points when you play selected slots. There is also a ‘Live Casino Cashback’ offer, which gives you up to $100 cashback every week.
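The first-deposit match bonus mentioned above comes down to simple arithmetic: the bonus equals the deposit multiplied by the match rate, capped at the maximum. The short Python sketch below illustrates this under the figures quoted in this article (100% match, $100 cap). The function name and its parameters are hypothetical and chosen only for illustration; the actual terms (minimum deposit, wagering requirements, currency) are not stated here and may differ.

```python
def match_bonus(deposit, match_rate=1.0, cap=100.0):
    """Estimate a first-deposit match bonus.

    match_rate=1.0 stands for the 100% match and cap=100.0 for the
    $100 maximum quoted in the article. Both are assumptions for
    illustration, not confirmed terms.
    """
    if deposit < 0:
        raise ValueError("deposit must be non-negative")
    # The bonus grows with the deposit until it reaches the cap.
    return min(deposit * match_rate, cap)


# A few sample deposits: below the cap, exactly at it, and above it.
for deposit in (40, 100, 250):
    print(f"deposit ${deposit} -> bonus ${match_bonus(deposit):.2f}")
```

In other words, a deposit of $100 or more would earn the full $100, while a smaller deposit would simply be doubled.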

So whatever your preference, there’s sure to be a mobile bonus that’s perfect for you!

BC.Game Casino App Mobile Banking

BC.Game offers a wide range of mobile banking options, including credit and debit cards, e-wallets and more.

Deposits are instant, and BC.Game does not charge a fee for them. Withdrawals are also handled quickly, with most payments processed within 24 hours.

So whatever your preferred banking method, you’re sure to find an option that suits you at BC.Game!

Conclusion on BC.Game App

In conclusion, BC.Game is a great choice for anyone looking for a trusted and secure mobile casino experience. With a wide range of games, plenty of mobile bonuses and a user-friendly interface, it’s easy to see why BC.Game is one of the most popular mobile casinos around!

If you’re looking for a mobile casino that offers everything you need, then look no further than BC.Game!

Frequently asked questions about the BC.Game app

1.) How do I get the BC.Game app for iOS?

The BC.Game app is available to download for free from the App Store. Simply search for ‘BC.Game’ in the App Store and click ‘Download’. The app is compatible with iOS 11.0 or later.

2.) How can I get the BC.Game app for Android?

The BC.Game app is available to download for free from the official BC.Game website. Simply visit the website and click on the ‘Download for Android’ button. The app is compatible with Android devices running version 6.0 or later.

3.) What features does BC.Game offer?

BC.Game offers a wide range of features, including a large selection of games, exclusive mobile bonuses and a user-friendly design.

4.) What is the range of games in the BC.Game application?

BC.Game offers more than 500 games from a wide range of different providers. There is something to suit everyone’s taste, whether you’re a fan of slots, table games or live casino!